Last week, I was in Austria for a workshop on phase retrieval at the Erwin Schrodinger Institute, University of Vienna. The workshop, organized by Karlheinz Grochenig and Thomas Strohmer, started with three days of very interesting talks, and the rest of the week was devoted to open discussions and collaborations. This was my first workshop, but it was so good, I’m afraid future workshops might fail to meet my exceedingly high expectations!

First, Stefano Marchesini showed us how phase retrieval is used in applications to make sense of different types of small-scale measurements. He started with a brief overview of X-rays, and then explained something amazing that I had never heard before: When light waves pass by an object and are measured in the far field, Huygens’ principle says that the measurements give the pointwise absolute-value-squared of the Fourier transform of the object. Coupled with a sparsity assumption on the object, this has enabled researchers to determine the structure of small things like NaCl and DNA. Today, we’d like to resolve more complicated structures, such as biological macromolecules, but this is hard (probably because such objects are particularly exotic, and so it’s harder to pin-point the best structural assumptions). The most popular phase-retrieval algorithms are based on alternating projections (e.g., project onto what the data says, and then onto the sparsity assumption, and repeat), but these algorithms don’t work all the time. Since phase retrieval is the point of the entire workshop, it was nice to start off with this well-presented motivation.

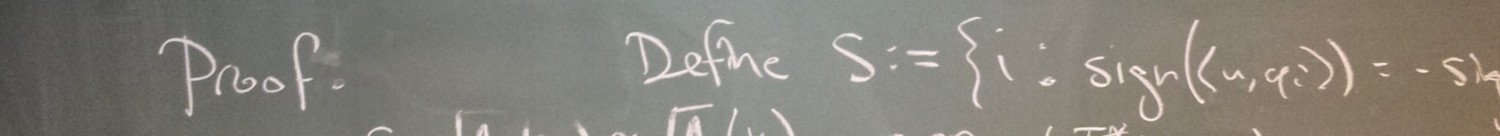

Next, Thomas Strohmer formally posed what are arguably *the* phase retrieval problems. Specifically, we want to recover from Fourier intensity measurements

, but this is imposible due to lack of injectivity in measurements (even after identifying signals which are equal up to a global phase). As such, we need to do one of two things: either

(i) make an additional structural assumption on , or

(ii) take additional intensity measurements.

Both of these are feasible solutions in the real world; indeed, Stefano explained that (i) is already the approach used in practice, albeit with room for improvement, and (ii) is possible by, for example, using different coded apertures over multiple exposures. Thomas also presented PhaseLift as preliminary work towards these ultimate goals, specifically for approach (ii). The main idea here is that we can restate the phase retrieval problem as a rank-minimization problem over positive semi-definite matrices subject to linear measurements, and that the rank minimization program can be relaxed to a trace-minimization program, much like the L0-to-L1 trick in compressed sensing. To date, the guarantees for trace minimization work with high probability for randomly chosen measurement vectors (leveraging the strength of concentration inequalities, etc.), so it remains to be shown how to prove these theorems with Fourier measurements. Thomas hinted with a simulation that random masks on Fourier measurements could very well be the next big step in this direction.

After lunch, Philippe Jamming showed us several neat tricks for phase retrieval, but I’ll only write about a couple of them. First, he gave a nice demonstration that phase actually captures a lot of important information about signals. He did this by taking two images (of faces), taking their Fourier transforms, keeping their magnitudes but swapping their phases, and then taking their inverse Fourier transforms. The result was a face-swap (due to the phase-swap). Yes, there were strange artifacts in the resulting images, but it was very clear after seeing this that the phases somehow capture the majority of the information. (I wonder how one could make this rigorous…) Next, Philippe showed us his take on Wright’s conjecture:

Wright’s conjecture. There exists a unitary transform such that every function

is determined up to a global phase by

,

and

.

Recall from a previous post of mine that this is impossible in finite dimensions since dimensions are required to smoothly embed

-dimensional projective space. Understanding that infinite dimensions could very well make a difference, Philippe conjectures that the fractional Fourier transform

is one such

, provided

is irrational. This would be particularly nice for quantum mechanics (from whence the conjecture originated), since

corresponds to Schrodinger’s time evolution operator at time

for the harmonic oscillator, and so this would confirm a suspicion of Vogt that position, momentum and time could together determine pure states.

Viewing the Candes-Strohmer-Voroninski results as preliminary results for the workshop, one significant point can be made: These original results used an adversarial noise model, whereas a stochastic noise model is probably more appropriate in some applications. Before we adjourned for the first day, Radu Balan presented some of his recent work on phase retrieval, which specifically addresses stochastic noise. In his work, the goal of reconstruction is to find the maximum likelihood estimator under a Gaussian-noise assumption, but this optimization is most naturally posed over all rank-1 symmetric matrices, which is not an easy set to optimize over. As such, he adds a few terms to the objective function to make it convex over all symmetric matrices, and optimizes using an iterative least-squares routine. He showed us some results in terms of Cramer-Rao lower bounds, along with some numerical simulations that illustrate convergence of his algorithm.

I started off Day 2 by describing the polarization technique I blogged about way back when. I concluded my talk by describing a noise-robust version of the technique, but I’ll save those details for a future blog post. I will say that the stable version has two particular features of interest: First, instead of propagating relative phase information over a spanning tree (which inevitably accumulates significant error in the leaves), we account for the pieces of relative phase information in a more democratic fashion using a new spectral-type method called angular synchronization. Second, since the last step of the polarization technique applies the pseudoinverse corresponding to some subcollection of vertices, we guarantee that this pseudoinverse is stable by requiring the vertex vectors to form a numerically erasure-robust frame.

The next two talks (given by two of my polarization collaborators) focused on these specific features of the noise-robust technique. In particular, Afonso Bandeira went into detail on how a natural generalization of angular synchronization can solve a general class of important problems:

Suppose you are given a graph , and you’re told that each vertex

is labeled by some undisclosed member

of a group

. Given a noisy version of

for each edge

, how might you reliably and efficiently estimate

?

This type of problem comes up in cryo-electron microscopy, image registration, phase retrieval, and elsewhere. Similar to phase retrieval, you are not given enough information to distinguish from

, regardless of

(this is the “global phase factor”). Despite this ambiguity, the optimal solutions to this problem can be characterized with combinatorial optimization, but such programs tend to be rather inefficient. Thankfully, Afonso is able to relax the combinatorial objective function to one whose optima can be easily expressed in terms of eigenvectors of a matrix called the connection Laplacian, which is built from the noisy versions of

in terms of faithful matrix representations. Furthermore, when the group is sufficiently nice, such as

, Afonso can demonstrate that optima in the relaxed program are within a factor of optimal in the original combinatorial program. The analysis breaks down when the group isn’t nice enough, such as

; otherwise, Afonso would have inadvertently shown that the Unique Games Conjecture implies P=NP.

Next, Matt Fickus discussed our recent work on numerically erasure-robust frames (NERFs), specifically the use of large symmetry groups and explicit -nets to evaluate the NERF bounds of certain frames in sub-exponential time. He wanted to keep us on time for the coffee break, so he didn’t give too many details, but check out my previous blog post on the topic if you want to read more.

After some coffee, we got to enjoy a particularly elegant presentation by Irene Waldspurger. First, she showed us some results concerning intensity measurements with Cauchy wavelets. In this part of her presentation, I was particularly impressed by some intuition she provided:

Let and

denote low- and high-pass filters. Then given an audio signal

, the human ear does not distinguish between

and

.

That is, the relative phase between low- and high-frequency components is not captured by the human ear, and she demonstrated this by playing two “different” versions of the same song on her computer. Very neat. The second part of her talk concerned PhaseCut, which she presented as an attractive alternative to PhaseLift. First, the optimization program can be viewed as an instance of MaxCut, and so we can leverage those solvers accordingly. Next, denoting the optimization-less version of PhaseLift by “weak PhaseLift”, she was able to convey some very interesting relationships between weak PhaseLift and PhaseCut. For example, the rank-1 projection is a solution of weak PhaseLift precisely when

is a solution of PhaseCut, where

denotes the intensity measurements collected. Also, if weak PhaseLift is stable in a particular instance, then so is PhaseCut, and furthermore, there are instances in which simulations reveal that the converse does not hold. For example, if

is (nearly) sparse (think real-world spectrograms), then PhaseCut is stable but weak PhaseLift is not.

Next, Albert Fannjiang showed us his state-of-the-art results on intensity measurements with Fourier masks. Remember: Thomas told us these actually have an important real-world implementation (coded-aperture-based exposures), so this is a particularly significant research direction. The main idea is to view each Fourier mask as a diagonal operator, and then we want each diagonal entry to have unit modulus, since otherwise we will be wasting energy in our exposure. If we draw the phases of these diagonal entries from a continuous distribution, then Albert can show that the resulting Fourier masks have many interesting properties (such as different types of injectivity) with certain (large) probabilities. He also presented an alternating-projections-type algorithm which leverages FFTs for speed, and he can guarantee convergence. It’s always rather nifty whenever the Fourier transform comes up in nature, and then you can analyze things with the unreasonably effective FFT implementation.

Martin Ehler gave the last talk of the day, in which he introduced a neat generalization of the phase retrieval problem: Instead of receiving the sizes of inner products with certain measurement vectors, you get the norms of projections onto certain measurement subspaces. To solve this problem, Martin takes inspiration from two particularly influential papers in the phase retrieval literature. First, he builds cubatures in the style of Balan-Bodmann-Casazza-Edidin, which require the number of measurements to scale like the square of the signal dimension. Next, he generalizes PhaseLift to establish that far fewer measurements (i.e., a constant multiple of the dimension) can be used. In both cases, his description of the analysis reveals how difficult it can be to prove things about subspaces instead of mere lines. This is not too surprising to me, having spent a bit of time thinking about fusion frames, so from my perspective in particular, it’s nice to see progress.

The last day of talks was particularly exciting. Bernhard Bodmann started it off by describing a particular method of phase retrieval: “from the roots.” Here, Bernhard considers the case where the measurement vectors form a certain harmonic frame. Specifically, take the discrete Fourier transform matrix and collect the first

rows; then the resulting columns are the

-dimensional measurement vectors. Note that if the original signal is known to be real, then

measurements are injective whenever the measurement vectors are full spark, as this particular harmonic frame is (since all

submatrices are Vandermonde with distinct bases). Analogously, Bernhard exploits the Fejer-Riesz spectral factorization theorem to say that these measurements completely determine signals from another interesting class. To be clear, identify a vector

with the polynomial

; then Bernhard can uniquely determine the vector if the roots of the corresponding polynomial all lie outside the open unit disk in the complex plane. In general, Bernhard can actually say the polynomial is one of

possibilities; here, the only ambiguity is whether a given root is at

or

, that is, we can flip any root from outside to inside the disk. This is fantastic, since this is precisely how much ambiguity we have in the real case after taking only

measurements, and in that case, we know it suffices to take only

additional measurements. In the complex case, it is unknown exactly how many measurements we need for injectivity, but we’re starting to believe the number is

. This is mainly because we know

is necessary and sufficient, and furthermore, when the dimension is

, we know

is the correct number of measurements. Next, Bernhard made a significant stride toward closing the

conjecture: Taking inspiration from Philippe Jamming’s talk on Day 1 (wow!), specifically his reasoning behind why the fractional Fourier transform might settle Wright’s conjecture, Bernhard constructed

measurements which give injectivity! In addition to taking the

measurements from before (viewed as equally spaced points on the complex unit circle), he also takes

measurements corresponding to equally spaced points on another unit circle in the complex plane, this one being the image of the real line under a specially chosen (think “sufficiently irrational”) Cayley map. But wait a second – that’s a total of

measurements, right? Well, Bernhard picks the second circle in such a way that it intersects the first at two points, and that these intersection points correspond to measurements from both circles, so we might as well throw away two of them. Very sneaky! The rest of the talk concerned efficient and stable reconstruction from these sorts of measurements. In the end, he was able to demonstrate stability against “continuous perturbations,” which means there is some stability, but we don’t know exactly how much. To see how much stability there is, he showed us a promising simulation in which the original signal is sparse (as it tends to be in the real world, based on Stefano Marchesini’s talk).

Bernhard’s was a tough act to follow, but Yang Wang did it well. He presented a construction which, in the real case, uses less than intensity measurements to completely determine any

-dimensional signal. He didn’t say, but in the complex case, the constant probably changes from 2 to 3 or 4. And since

in the real world, this is particularly few measurements. The main idea is to start by determining the size of each entry (i.e., measure with the identity basis). After this step, it suffices to determine relative phases between the entries. This is the same idea captured in the polarization technique described in my earlier blog entry. However, his method differs in that he recursively determines the relative phase between chunks of vector entries. First, entries are paired up to find agreement, then pairs are paired up, etc. To find agreement, a full spark frame is applied in concert with a polarization trick to extract relative phase. In the end, the construction uses particularly few measurements, and comes with a particularly fast recursive reconstruction algorithm, taking only

operations. Yang concluded by describing some preliminary work on noise robustness. Based on my experience, I suspect Yang will need a stronger version of full spark to produce reasonable stability guarantees.

The final talk was given by Gregory Beylkin, who applied his expertise in numerical analysis to tackle an important problem in real-world phase retrieval that most of us have been ignoring: When you capture the Fourier transform (absolute value squared) of an object using an X-ray exposure (as Stefano Marchesini described), you don’t actually record this transform over the continuum. Instead, you sample it, and you need to sample it intelligently in order to accurately capture the information at hand. This is the role of good quadrature rules, in which Gregory is an expert. In particular, when making the (accurate) assumption that the object has compact support, the need arises for quadratures which are specifically designed for band-limited exponentials; here, polynomial quadratures fall short, since they have undesirable sample concentrations at endpoints. During the course of his talk, I was most intrigued by the following theorem, which he attributed to Constantin Caratheodory, and I restate in terms of matrices:

Theorem. For every nonzero vector , there exists a unique

along with a vector

of all positive entries and an

Vandermonde matrix

of

distinct unit-modulus bases such that

. Moreover, the representation is stable:

.

For a sketch of the proof, check out slide 18 from Gregory’s presentation. This theorem is one of those gems in math that I’d love to apply some day. Gregory uses similar analyses for his main result.

The experiment of swapping phases of the Fourier transforms of two images has also been documented in the book “Digital Image Processing” by Bernd Jähne (see http://books.google.de/books?id=5EShlIsf4TQC&printsec=frontcover&dq=j%C3%A4hne+phase&source=bl&ots=8wf4Nx5mOt&sig=-5cE-pIGvCo7w7Srjc5xyJ7_yfI&hl=de&sa=X&ei=lFR8UNGsAYyHswbD7YC4Dg&ved=0CDoQ6AEwAQ#v=onepage&q=j%C3%A4hne%20phase&f=false, page 58), however, I have no idea who deserves credit for this observation.

A non-rigorous explanation goes like this: The phase encodes the *position of edges* (obviously, a a global phase shift moves the whole image and hence all edges in the same way) and edges are probably the most important aid for visual interpretation. Moreover, images from different but comparable scenes usually have a quite similar power spectrum (i.e. magnitude of the Fourier transform) depending on the direction and strength of the main edges and hence, keeping magnitude and swapping phases basically swaps the “first visual impression”. I guess that one can construct an example in which you take the phase of the Fourier transform of an image and combine it with a totally different magnitude (not coming from an image) and obtain something which does not look like the original image anymore.

Well, it makes a lot of sense when you put it that way. With the help of eigenfaces, I bet we could easily determine how constant the power spectrum is from face to face, thereby forcing the phase to capture the facial characteristics we know and love. Some sort of pigeonhole argument with entropy…

Thank you for any other magnificent article. Where else may just anyone get that

kind of information in such an ideal method of writing?

I have a presentation next week, and I’m on the search for such information.

Wow, marvelous weblog structure! How long have you been blogging for?

you made blogging look easy. The total look of your site is magnificent, let alone the content!